This is the second part of a three-part article about data compression, with particular focus on media (images, audio and video). It does not require any knowledge of information theory, mathematics or programming. I have tried to use language accessible to anyone without sacrificing the correctness and thoroughness of the information provided.

Use the following links to display each part of the article:

- Part 1 - Lossless data compression

- Part 2 - Lossy compression of stills and audio

- Part 3 - Video compression

When some issues are considered more complex (or less relevant), they will be placed inside a note box. You don't need to read the notes to understand the fundamental principles being described, but you might want to check them out anyway, especially if you noticed a potential problem with some special case of the processes described in the previous paragraph(s).

Binary digit (bit) will sometimes be abbreviated as "b", and bit octet (byte) will sometimes be abbreviated as "B". Those abbreviations might be prefixed by standard SI magnitude symbols like "k" (kilo), "M" (mega) or "G" (giga). So "kb" stands for "kilobit", "kB" stands for "kilobyte", "Mb" stands for "megabit", and so on. When the number is represented using a "binary magnitude" (i.e., using a factor of 1024, instead of 1000), it will use the IEC binary prefixes instead (kiB, MiB, GiB). See the FAQ for more details about this distinction.

In part 1 of this article of this article we looked at several types of lossless data compression algorithms, as well as at some of the techniques that can be used to improve their performance. In part two we're going to look at some of the techniques used in lossy compression (where the data obtained after decompression is not necessarily identical to the original), and how some of those techniques can be adapted to improve lossless compression.

![]() Note...

Note...

Most of the concepts and techniques described in this article are quite complex, and their underlying algorithms would take several pages of software code and / or mathematical formulas to explain fully. For this reason, I have opted for a more abstract approach, explaining how the technique works, rather than how it's implemented by specific programs or file formats. So if this article says "file format X uses this technique", that does not mean it uses only that technique, or that it does not refine that technique (sometimes far) beyond what is described here.

In the first part of this primer I mentioned that it was important to distinguish data from information. Although the term "information" can be used in a mathematical sense, in practical terms we can think of "data" as what the computer manipulates and "information" as what that data means to us. Completely different chunks of data can have virtually identical meanings, and identical chunks of data can mean different things depending on the software used to interpret or display them. For example:

$ 1,000,000.00

Gives us almost exactly the same information as:

One million dollars.

And this sequence of bytes:

[65][66][67]

Will render as:

- "ABC" in a text file

- A sequence of three dark gray pixels (each slightly brighter than the previous one) in an 8-bit grayscale image file

- A single dark gray pixel with a slight blue cast in a 24-bit RGB file

- ...etc.

Or rather, what matters isn't the file itself, it's the software used to load, interpret and display it. Generally, computer programs and operating systems will look at the file name (especially the extension) or the file headers (the data at the beginning of the file) to determine how it should be interpreted and displayed. That is why changing the file extension can make a file (apparently) impossible to open, although none of the data in it has actually been changed. And this is also why one of the main ways to hide information is to disguise it as a different type of data.

But this is an article about data (and information) compression, not cryptology or steganography, so what really matters to us is this: how can we change the representation of a particular piece of information (an image, a song, etc.) so that it takes up as little space as possible?

We tend to think of compressed files as somehow containing the original data. Basic lossless compression techniques do indeed preserve part of the source data, changing only the representation of values or segments that are repeated. But, in practical terms, "compression" is a generic term for a change in the representation of information (i.e., a form of encoding) that takes up less space, and from which the original information can be recovered. This new representation might in fact not contain any of the original data.

For example, if our source data consisted of this:

2 4 6 8 10 12 14 16 18 20 22 24 26 28 30 32 34 ...etc. (up to 1000)

We could represent it instead as the following sentence:

A sequence of the first five hundred even positive integers.

That might not be a highly optimised representation, but it is shorter than the original, it doesn't contain any of the original data in a literal form, and it's easy to "decompress" (assuming our "decompressor" understands the sentence, of course).

Basic RLE and LZ algorithms would be unable to compress the data in that example, because there is no redundancy. But if we create a compressor that looks for that kind of pattern (a linear progression of values) and stores its "description" using some optimised encoding, and if we create a decompressor that can recreate the original data from that description, we might be able to store that information in a much smaller file. For example, the sequence above could also be described as "a sequence of 500 numbers starting with 2 and increasing by 2". And this could be encoded (for example) like this:

IS [2] [500] [2]

Where data copied from the source is shown in blue, compression counters are shown in red and control codes are shown in magenta. In the first part of the article we used a simple marker to toggle between compressed and uncompressed blocks. But if we want our compressor to be able to deal with different types of data patterns (encoding each in a different way), we need to use multiple codes (which I will here identify with a group of letters but, in an actual compressed files, could generally be stored in a single byte.

Upon receiving the data above, our decoder would look at it and say "Aha, an 'IS' code. That means I need to read the next three integers, and then output a sequence starting with the first of those integers, with a total length equal to the second integer, and a step equal to the third integer." And it would recreate our original data exactly (in other words, this is still lossless compression).

This kind of technique, where the compressor looks for, interprets, and encodes patterns of data variation, and not just repeated data, has the potential to achieve much better compression than "simple" compressors (like RLE and LZ), that treat all data the same way. Most real-world LZ-style compressors use some of these techniques, in addition to their "main" compression algorithm. And this is, of course, the basis of prediction and error coding, which we also looked at in the first part of the article. But now we're talking about going one step further. Rather than replacing each value with the difference between a prediction and the real value, we are replacing sequences of pixels with "instructions" on how to rebuild them.

Of course, this is only useful if this kind of pattern occurs in our file; if there are no such sequences, wasting time looking for them will only make the encoder slower. So, where do these kinds of smooth progression occur? You guessed it: in audio and video (including still image) files.

But, while computer-generated files might have perfect progressions (ex., a linear gradient between two colours, or a perfect sine wave in an audio file), files created from "natural" sources, such as photographs, films, video or audio recordings, generally will not. This can be either because the source itself doesn't follow a "neat" rule such as a linear or exponential or logarithmic progression, or because noise was introduced during the acquisition process (ex., video grain).

This greatly reduces the efficiency of our data compression algorithms, and forces us to look more closely at the distinction between data and information.

The following image shows a sequence of 18 pixels (enlarged) with increasing brightness values:

Fig. 1 - Smooth grayscale gradient

Using an 8-bit (0-255) scale, each pixel's brightness differs from the previous one by 15. In other words, their grayscale values are:

[0] [15] [30] [45] [60] [75] [90] [105] [120] [135] [150] [165] [180] [195] [210] [225] [240] [255]

This kind of progression could be compressed easily using the technique described above:

LCS [0] [18] [15]

Where "LCS" represents our code for "linear character sequences" (remember that "character" is a generic name for a digital value with 1 byte, like the ones used in an 8-bit grayscale image; we won't be discussing text compression in this article, so whenever you see a value described as a "character", that means simply that it's stored as a single byte).

This brings the size down from 18 bytes to just 4 (assuming we use byte values for the three parameters, and also a single byte to define the code).

Now, let's say we change the value of the 10th pixel from 135 to 136. Our encoding technique is now unable to describe the complete sequence, but we can still store our data in three "blocks":

LCS [0] [9] [15] S [1] [136] LCS [150] [8][15]

Where "S" represents our code for "simple" or "straightforward" data (followed by the length of the simple data block, which in this case is just one (byte), and then by the value itself, which in this case is 136. The beginning and end of the gradient are encoded as "linear character sequences", as above.

This (11 bytes) is still shorter than the original uncompressed data, but not nearly as good as when the progression was perfect. And if we "jiggle" a few more values slightly, getting this:

[0] [13] [31] [44] [60] [76] [92] [106] [120] [135] [149] [165] [181] [195] [210] [225] [239] [255]

Our encoding technique becomes completely useless... or does it?

Here is our original sequence of pixels:

Fig. 1b - Smooth grayscale gradient

And here is our "jiggled" sequence of pixels:

Fig. 2 - Jiggled grayscale gradient

Can you tell the difference? Let's put them right next to each other:

Fig. 3 - Comparing both gradients

Now can you tell the difference? If you have a good monitor and look very closely, you might indeed notice a difference between the two (especially on the 7th pixel). Would you still notice it if the pixels were shown in their actual size? Probably not, but, even if you did, that is not the real question. The real question is this: do you feel that the two pixel sequences (the perfectly linear one and the "jiggled" one) supply you with different information? If one was replaced with the other, in the middle of an image, do you think that would make any difference to your perception and interpretation of the image?

This is the fundamental idea behind lossy compression: preserving meaning (perceptible information), rather than preserving data (exact digital information). By allowing for some deviation from the source data when encoding particular patterns, lossy compressors can greatly reduce the amount of data required to describe the "meaning" of the source media.

![]() Note...

Note...

If you are familiar with vector graphics, you might notice a certain similarity between this concept and tracing. Tracing is the process that tries to describe the shapes in a bitmap by replacing them with geometric shapes, such as polygons, circles, straight lines or Bézier curves. The result is usually slightly different from the original, but can be saved in a much smaller file. Tracing only works well with relatively simple shapes, though, like drawings and logos. Modern lossy image compressors are especially optimised to deal with natural images, such as photographs.

For this reason, lossy compression can only be applied to information that is meant to be interpreted by a reasonably sophisticated "meaning processor" (ex., a human being, an image recognition system, etc.). In other words, by something that looks at a representation or rendering of the data, rather than at the (digital) data itself.

This means that, in an image file, the information representing the image itself may be encoded using lossy compression, but the file headers and control information (describing the image resolution, number and type of colour channels, etc.) are encoded in essentially the same way as in an uncompressed (or losslessly compressed) image.

Some people assume that the "lossy" in "lossy compression" refers to a loss of quality. This is incorrect. The term "lossy" refers to a loss of information in the mathematical, or digital sense. For example, if we take an image where some pixels have a brightness value of 10 and some have a brightness value of 11, and set them all to the same value (ex., 10), we have lost some information (i.e., the information that allowed us to distinguish those pixels from each other based on brightness). This might not be noticeable by looking at the image (in other words, there was no loss of quality), but some aspects of the source data have been lost and cannot be recovered from the result (the process is not fully reversible).

The term "lossy data compression" can be considered slightly incorrect, since lossy compressors don't really aim to store the original data. The stored ("compressed") data simply allows the decompressor to recreate the original perceptual information. The definitions of "data" and "information" in everyday language are somewhat flexible, so the expression isn't necessarily an error, but, in this article, I will avoid using it, and use just "lossy compression" (of images, of audio, of video, etc.) instead.

Lossy compression algorithms can cause an effect similar to the "generation loss" of analog media. However, contrary to what happens with analog media, simply copying digital data will never cause a degradation, regardless of the compression methods used (unless there is an error during the copy operation, naturally). The "digital generation loss" problem occurs when the data is decompressed and then compressed again, especially if this cycle is repeated several times or if it uses different (lossy) compression settings. Image and sound editing applications will keep the media in its uncompressed form in memory while editing, but if the data is then saved to a lossily-compressed file and opened again (in the same application or in a different one), there is a good chance that its quality will degrade. For this reason, it's preferable to use uncompressed files (or files with lossless compression) during the production process. If the source files are themselves compressed (ex., DV video files) there is no advantage in converting them to an uncompressed format (the detail that was lost during the original compression cannot be recovered), unless they are going to be processed sequentially by two or more applications.

![]() Note...

Note...

Some manufacturers (especially of high-end video equipment) describe their systems as using "visually lossless compression" or "virtually lossless compression". All this means is that their compression system doesn't cause very noticeable degradation on the first generation. It's still lossy compression, and repeated compression cycles can still cause a progressive loss of quality. Real lossless compression systems are guaranteed to preserve the data exactly through any number of recompression cycles.

In the example given in the previous section (with the two grayscale gradients) the maximum deviation between the "perfect" gradient and the "jiggled" one is 2 (on pixels 2 and 7). This is (on a scale of 0 to 255) less than 1%, so it's relatively "safe", from the point of view of preserving the way the image looks, to encode the gradient as "perfect". But what if some pixels had a difference of 5% or 10%? At that point, the "perfect" gradient would probably look different enough from the original to cause a visible degradation in the image, and should be broken down into two or three separate gradients, or encoded in a completely different way.

So, exactly how far should our algorithm go before it decides that it can't approximate the source data as a "perfect" gradient? Well, there is no simple answer to that, which is why most lossy compressors let the user adjust the level of compression.

That is one of the fundamental practical differences between lossy and lossless compression. With lossless compression, the algorithm determines how big the resulting file will be. With most lossy compressors, file size can be decreased by allowing the encoder to deviate more from the source data. This is especially useful when transmitting media in real-time over a relatively slow (or variable-speed) connection, such as the internet.

And now a bit of exercise:

How would our simple "gradient encoder" described above actually go about its pattern detection? We've been looking at the grayscale gradient as a well defined "object", starting with black, ending in white, and with exactly 18 pixels, but that is a very specific situation; our encoder would have to be able to look for that kind of pattern in a more generic way.

One way to do that would be to analyze the brightness values one by one, comparing them with a "perfect" gradient of the same length, with a margin of error defined by the user. It's easier to understand if we use an example. Let's say our source data looks like this:

[2] [4] [8] [12] [14] [18] [19] [24] [25] [28] [30]

And our maximum allowed margin of error is 3.

Now, there are different ways to look for gradient-like patterns in that data. Let's look at a couple of them.

The first version of our encoder starts by looking at the first two values to determine the "step" of the gradient. The difference between those two values is 2 (4-2). This means that, in a "perfect" gradient based on those two samples, the next value would be 6, then 8, then 10, and so on. In other words, it would look like this (red numbers indicate predicted values):

[2] [4] [6] [8] [10] [12] [14] [16] [18] ...etc.

![]() Note...

Note...

This is a basic form of prediction, similar to the one described in the previous part of the article. The advantage of this specific technique, compared to more complex pattern detection, is that it can be applied to streaming data, without access to future samples. If you are interested in this subject, look up "linear prediction" and "linear predictive coding" on the internet.

Our encoder now loads the third value from the source data (8) and compares that with the predicted value (6). Is the difference between the two within our margin of error (3)? Yes, the difference is just 2. So the encoder looks at the fourth value in the source data (12). Is it close enough to the expected value (8)? No, the difference is 4, which is more than our allowed margin. So our encoder describes only the first three values, using the notation introduced earlier in this article, like this:

LCS [2] [3] [2]

In other words, a 3-byte long linear character sequence starting with 2 and with a step of 2.

![]() Note...

Note...

This would actually take up more space than storing the original data with no compression, so it wouldn't be efficient; I used a short sequence just to get the point across, in the real world it would only make sense to encode a sequence of values as a 4-byte "linear character sequence descriptor" if the original sequence was longer than 4 bytes, naturally.

It's important to notice, however, that if we decode that "linear character sequence", we'll get this:

[2] [4] [6]

Which is not identical to the original data. But it is within our "approximation threshold".

Our encoder then starts again, from the 4th value onwards. It compares the 4th value (12) with the 5th (14) and determines that the step is 2. This is predicted to continue as:

[12] [14] [16] [18] [20] [22] [24] [26] ...etc.

And so the encoder compares the 6th value of the source data (18) with the predicted value (16). It's within the margin, so it moves on to the next (19). This is also close enough to the predicted value (18), so we move on to the next (24). This is not close enough to the predicted value (20), so our second gradient ends here, and gets encoded like this:

LCS [12] [4] [2]

And so on.

This technique has one fundamental problem: the predicted step between values is defined by the difference between the first two, and never changes. This means that the first two values are always preserved, but it can force us to break down our sequence into multiple sequences if that initial step is different from the step between subsequent values, even though, overall, it would be possible to store them as a single "LCS" with all values within the defined margin. So let's look at a slightly different technique.

This time, instead of predicting the step (that is, the variation between consecutive values) from the difference between the first two values, we'll recalculate it with each step. Here is our source data again:

[2] [4] [8] [12] [14] [18] [19] [24] [25] [28] [30]

And we are still using the same margin (3).

Our encoder could start by looking at the first two values and encoding them as a 2-byte linear sequence. The first value is 2, the number of values is 2, and the step between them is equal to the difference between the first and second value, which in this case also happens to be 2. So we can encode the first two values like this:

LCS [2] [2] [2]

Of course, this is useless (the result is bigger than the source data). But the exact same technique can be used with any number of values.

So let's try to extend that sequence to 3 bytes. This can be done by setting the starting value of the LCS to the first value of the source data (2), setting the size of the LCS to 3 and setting the step to the difference between the first and last values, divided by the number of steps (which is equal to the number of values minus one). In other words, our starting value is 2, the number of pixels is 3 and the step is (8 - 2) / 2, which is 3 (notice how the step has changed from 2 to 3). Our encoder would therefore represent that gradient like this:

LCS [2] [3] [3]

The compressor now decodes that into an actual character sequence (i.e., a sequence of bytes):

[2] [5] [8]

And compares it to the original data. Are all values within the error margin? Yes. The first and last values are identical, and the second value (5) is close enough to the original (4). So our encoder moves on to the fourth value in the source data, and encodes that sequence as:

LCS [2] [4] [3]

![]() Note...

Note...

The actual step value, based on the source data, would be (12 - 2) / 3, which equals 3.333 (with an infinite number of 3s). However, non-integer values cannot normally be represented by a single byte - they need at least two - so here the result was rounded to the nearest integer.

Decoding that, we get this sequence:

[2] [5] [8] [11]

Is each of those 4 values within 3 of the corresponding source values? Yes, it is. So we move on to the fifth value, and get:

LCS [2] [5] [3] = [2] [5] [8] [11] [14]

An so on:

LCS [2] [6] [3] = [2] [5] [8] [11] [14] [17]

LCS [2] [7] [3] = [2] [5] [8] [11] [14] [17] [20]

LCS [2] [8] [3] = [2] [5] [8] [11] [14] [17] [20] [23]

LCS [2] [9] [3] = [2] [5] [8] [11] [14] [17] [20] [23] [26]

LCS [2] [10] [3] = [2] [5] [8] [11] [14] [17] [20] [23] [26] [29]

LCS [2] [11] [3] = [2] [5] [8] [11] [14] [17] [20] [23] [26] [29] [32]

In other words, our new algorithm, despite being slightly more complex and slower (because it needs to rebuild and compare the entire sequence with each step, as well as readjust the step size), was able to encode all the source data as a single "LCS" that still respects our original margin of error.

These are just very simple examples that show how different approaches to pattern approximation can deliver very different results. This is one of the reasons why two files in the same format, generated by two different encoders, can have different sizes for the same quality, or different levels of quality for the same size. Some compressors are optimised for speed, some are optimised to run on very small amounts of memory, and so on (this applies to lossless compressors as well, as was pointed out in the previous part of this article, but with those there is no change in quality, only in the compression ratio, speed, and amount of system resources used).

In the first paragraph of the previous section I wrote that lossy compressors try to preserve meaning (or perceptible information). Using a fixed margin of error (as in the examples above), however, does not take into account the fact that some parts of the source data might carry more meaning than others.

For example, a very low hissing sound in an audio file probably carries less meaning than a loud human voice talking (unless you're a sound engineer, anyway). So wouldn't it be nice if our lossy compressor could decide which parts of the source information are more meaningful, and preserve those more accurately, while allowing for a greater error (to improve compression) on parts that carry less meaning?

Well, computers can't really make those decisions, because they have no idea what humans consider "meaningful", but the people writing compression algorithms and defining compressed file formats can use statistical and physiological knowledge of human perception to shape that software.

Perception refers not only to what our sensory organs detect, but also how our brain process that information. Here are a few facts about our "multimedia input system" (our eyes and our ears):

There are two different types of photoreceptors in our retina: cones and rods.

Rods are sensitive only to the brightness of incoming light. They can "see" in very low light, but they can't distinguish colours and are relatively slow to respond to changes (kind of like an old LCD). Rods are used mainly for night vision and peripheral vision. They are sensitive mainly to cyan and green light (wavelengths between 450 and 550 nm), although they will also pick up some blue, yellow and orange light. They will not, however, report any information about colour (i.e., light picked up by the rods is treated by our vision as monochrome)

Cones are less sensitive to low light, but they can "see" colour and react faster to changes in light. They are concentrated at the center of our retina (an area called the fovea). Cones are divided into three types. The first type is known as "L", "X" or "Red", the second type is known as "M", "Y" or "Green" and the third type is known as "S", "Z" or "Blue". In practical terms, the "green" cone is also somewhat sensitive to blue, cyan, yellow and red, the "red" cone is also sensitive to yellow and blue (but not cyan), and the "blue" cone is also sensitive to cyan.

![]() Note...

Note...

Some studies suggest that some humans (up to 50% of women and up to 8% of men) are born with four types of cones. The fourth cone type appears to be sensitive mainly to yellow light. Since that extra cone is not sensitive to wavelengths outside the normal spectrum, the only effect it has is to make it easier to distinguish between colours in the red - green range (i.e., greens, yellows, oranges, browns and reds). This might explain why men and women often have a different opinion about when something "greenish brown" becomes "brownish green". Or maybe we do that just to annoy each other. If you are interested in this subject (the four cones, not the greenish brown debate), search for the term "tetrachromacy" on the internet.

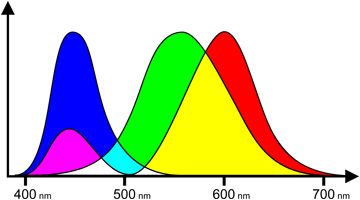

The following image shows the frequency range that each type of cone is sensitive to.

Fig. 4 - Colour sensitivity of cones (by wavelength)

However, we don't have the same number of cones of each kind. We generally have far fewer blue cones than red or green cones. As a result of this (and also of the spectral sensitivity of rods), we are generally more sensitive to green and yellow light, which means we are better able to see details (and subtle changes of colour) in those wavelengths.

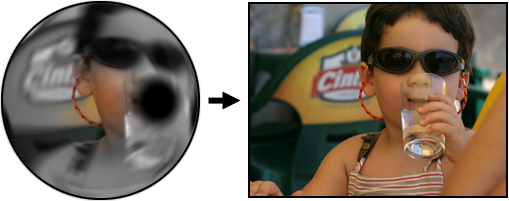

Given the distribution of cones and rods across the retina, we have much better resolution near the center than at the edges. In addition to this, each of our eyes has a "blind spot" where the optic nerve connects to the retina. It's up to our brain to "paint" the fine detail on peripheral areas and the blind spot (by combining the input from both eyes and recent visual memory). Our eyes catch the light, but we see with our minds.

Fig. 5 - Eye input and mental image (simulated)

Image compressors can't really be sure what part of the screen we'll be looking at, so they can't vary the resolution selectively based on the different properties of our retina's regions. But they can take our spectral sensitivity into account when deciding which colours and brightness levels should be preserved more accurately.

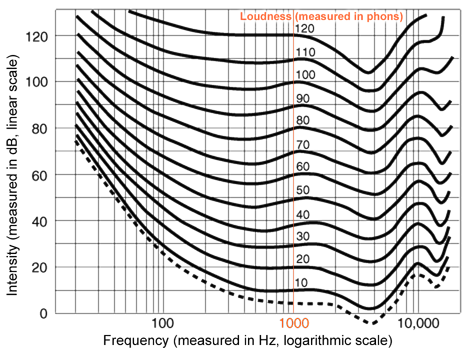

Just as our eyes are more sensitive to certain colours, so are our ears more sensitive to certain sounds. The following figure shows the sensitivity of human hearing to different frequencies, at different sound volumes. It uses a unit called "phon", that measures how loud a sound appears to be, compared to a 1 kHz tone at the same volume, measured in dB (that is why, at 1 kHz, the loudness (phons) always matches the volume (dB). The less sensitive we are to a particular frequency, the higher the volume (dB) must be to appear to have the same loudness (phons). So, the higher the dark line goes, the less sensitive we are to that particular frequency.

Fig. 6 - Human hearing sensitivity

As you can see, humans are especially sensitive to sounds in the 400-5000 Hz range (which is approximately the range of human voice). As the sound volume increases, the perceived loudness of different frequencies becomes more uniform (but we become more sensitive to higher tones, such as those produced by a baby crying). The dotted line is the average frequency sensitivity curve.

![]() Note...

Note...

These curves are determined experimentally, and will vary slightly from one person to another, or as people age. Women have slightly better hearing at high frequencies, and that difference becomes greater with age (ex., a 60 year old woman will generally hear high frequencies as well as a 45 year old man, although she will hear low frequencies worse than a man her age).

This data, along with some extra knowledge about how the brain interprets certain patterns, are used by some lossy compressors to determine which parts of the source information should be preserved more accurately, which can be less detailed, and which can be simply discarded. This is known as "psychovisual" and "psychoacoustic" compression modelling (or simply "psy optimisations").

Even if you're not a video engineer, you've probably seen the terms "YUV", "YPbPr" or "YCbCr" used in documents about video processing (or, failing that, the specifications page of some TV set's manual). Although not exactly identical, all of these refer to a method of colour representation that separates luminance (brightness) from chrominance (hue). One of the uses for that was to make colour TV broadcasts compatible with black and white sets. The luminance channel (Y) kept being transmitted in a way that black and white sets could understand, and the chrominance information (UV) was transmitted on a new frequency, used only by colour sets. This avoided any unnecessary duplication. But, as you may have noticed, colour TV sets use an RGB dot matrix, just like computer monitors. So how does the TV extract the RGB information from the YUV signal?

![]() Note...

Note...

The terms "luma" and "luminance" can mean slightly different things, and the same applies to "chroma" and "chrominance". If you are a video signals engineer you know that. If you're not, you don't need to worry about it. In this article I'll use the terms interchangeably. Luma and luminance refer to the brightness and chroma or chrominance refer to the colour (or hue).

Also, there is a difference between a colour space and a colour model. Essentially, a colour model is an abstract method of describing colours using numbers, while a colour space includes detailed information about viewing conditions and physical properties of the colours being represented. RGB is a colour model, sRGB and Adobe RGB are colour spaces. In this section I will use the term "colour space", since YUV and its derivatives include that information, even if it's not always respected.

Well, instead of looking at the actual colour spaces mentioned above, let's start by using a simplified example which we'll call "YXbXr". Let's say we have a pixel with the following RGB values:

R = 30

G = 100

B = 50

And we want to convert it to our "YXbXr" format.

The first thing to do is calculate the luminance (Y) value of that pixel. In our simplified colour space, that value can be calculated simply as an average of R, G and B. So:

Y = (R + G + B) / 3

Using our values from above:

Y = (30 + 100 + 50) / 3 = 180 / 3 = 60

So our Y value is 60. The Xb and Xr values are, respectively, the blue difference and the red difference. In other words:

Xb = B - Y

Xr = R - Y

Using the values from above, we have:

Xb = 50 - 60 = -10

Xr = 30 - 60 = -30

So our pixel can now be represented as:

Y = 60

Xb = -10

Xr = -30

To recover the original RGB data, the device (or software) processing the data needs only reverse the operation:

B = Y + Xb = 60 - 10 = 50

R = Y + Xr = 60 - 30 = 30

G = 3Y-R-B = 180 - 30 - 50 = 100

YUV, YCbCr and YPbPr (and a few other variations) are based on the same concept described above, but they take into account the human eye's sensitivity to different wavelengths when calculating the luminance (for example, our eyes are more sensitive to green than red, and more sensitive to red than blue), and they also distribute the chrominance values in different ways along the axes.

![]() Note...

Note...

Although this isn't important to understand the concept, here are the formulas normally used to convert between RGB and YUV:

Y = 0.299 R + 0.587 G + 0.114 B

U = 0.14713 R - 0.28886 G + 0.436 B

V = 0.615 R - 0.51499 G - 0.10001 B

And between RGB and (unconstrained) YCbCr:

Y = 0.299 R + 0.587 G + 0.114 B

Cb = 50% + 0.5 B - 0.168736 R - 0.331264 G

Cr = 50% + 0.5 R - 0.418688 G - 0.081312 B

Where "50%" represents the middle of the scale (ex., for 8-bit channels, this would be 128).

YUV is generally used for analog signals, while YCbCr is used for digital data (YPbPr is the analog version of YCbCr). Note that when using YCbCr to represent video (as opposed to still images), it is often constrained so that the values are kept between 16 and 235 (Y) and between 16 and 240 (Cb, Cr). This improves compatibility with analog signal processing equipment.

Some video editing applications list "native YUV operation" as a major "feature". Since most digital video is recorded in YUV (or, to be more precise, YCbCr), working in that colour space means some effects can be calculated faster, which allows the software to do real-time preview rendering. However, some effects can only operate on RGB data, and all it takes is for one of those to be applied to a clip to require a colour space conversion. Some programs sacrifice precision in exchange for speed, in this conversion, which can cause a small loss of quality (typically a very slight posterisation or "combing" effect).

![]() Note...

Note...

Posterisation (that is, the discontinuity of colour gradients caused by an insufficient number of intermediate tones) is called "combing" when it results not from limits of the data format or output medium themselves but from the elimination of some intermediate values from a larger palette (typically due to rounding errors during processing or from "stretching" a small range of values to cover a bigger one, such as when increasing the brightness and contrast of a very dark image). The name comes from the comb-like appearance of the histogram in such images. Combing can usually be detected in an image's histogram before posterisation is obvious on the image itself.

Some image editing software supports a colour mode called CIELAB (or just "Lab") that is similar to YCbCr in the sense that it separates brightness (L) from colour (ab), but, unlike YCbCr (or RGB / sRGB), Lab is based on the characteristics of human visual perception (instead of simple variations of physical properties), and requires higher precision and more complex processing to handle the same images.

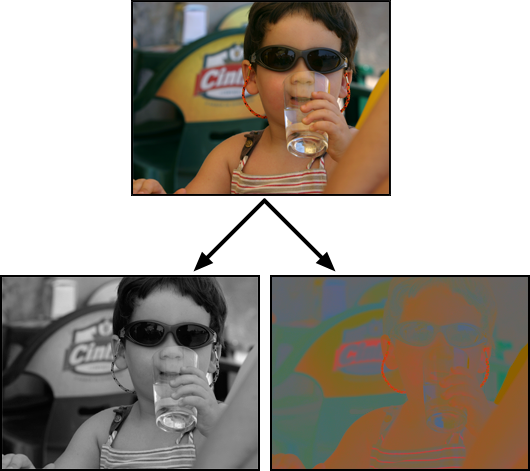

Fig. 7 - Separating luma (Y) from chroma (Cb+Cr)

Assuming there are no rounding errors, our YXbXr colour space is mathematically equivalent to RGB (and so are its "real-world" variants). What's the use of it, then? It has three main advantages:

- It makes our colour data directly compatible with black and white devices (they'll use the Y channel and ignore the rest). Granted, this isn't very important, these days, but can speed up some filters.

- It lets most compression algorithms (including lossless ones) achieve slightly better compression of "natural" images (like photographs), compared to RGB, because luminance and chrominance tend to vary in different ways (see large, nearly "flat" areas in the chroma image, above).

- But, more importantly, this kind of representation (where luminance and chrominance are separate) gives us the possibility to apply a very simple form of lossy compression that requires almost no computational power. It's called...

![]() Note...

Note...

Many sources refer to this concept as "chroma subsampling". However, "subsampling" suggests that a reduced number of samples was taken to begin with, when in fact most images with reduced chroma sampling were captured with full colour information, and downsampled only at the encoding or recording stage. For most purposes, though, you can consider that the terms are equivalent.

You have probably seen the term "4:2:2" used in several video-related documents and even product descriptions. This is a not-very-intuitive way to say that, for every four pixels, there are four luminance samples and two chrominance samples. What this means in practical terms is that, while each pixel in a "4:2:2" image can have its own luminance value, only one chroma sample is stored for each two consecutive pixels. In other words, the colour (or chroma) is "downsampled" (it has less samples than there are pixels).

Fig. 8 - 4:2:2 sampling

You may also have seen the terms 4:2:0 and 4:1:1, especially if you work with DV. 4:1:1 (used by NTSC DV and by both PAL and NTSC DVCPRO) means that, for every segment of 4 pixels, there are four luminance samples and one chrominance sample. 4:2:0 (used by PAL DV, by HDV, and by all DVDs) means that, for every square of 4 pixels (2 by 2), there are four luminance samples and one chrominance sample. 4:2:0 generally results in cleaner-looking images than 4:1:1. 4:4:4 (used by some high-end video formats) means that there are luminance and chrominance samples for all pixels; since that somewhat defeats the point of working in YUV, many 4:4:4 systems work directly in RGB, which is more easily processed by most software (in other words, 4:4:4 sampling refers to "normal" images, where every pixel contains values for the three channels).

Fig. 9 - 4:1:1 sampling

Fig. 10 - 4:2:0 sampling

Fig. 11 - 4:4:4 sampling

![]() Note...

Note...

There are a couple of different variations of these sampling patterns. Sometimes the U and V channels are sampled at the same coordinates, sometimes they are sampled at different coordinates, and sometimes the values are an average of two or four original samples. Since the decompression process can also affect the way chroma information is interpolated, there isn't much point in worrying about these details unless you are writing a codec.

Using a 4:2:2 sampling pattern (and assuming every channel uses the same number of bits per sample) reduces the total amount of data necessary to represent the image by 33% (4:2:0 and 4:1:1 reduce it by 50%) but, thanks to the fact that our eyes are naturally more sensitive to luminance than they are to hue, and to the fact that hue tends to vary smoothly in "natural" images, this reduced sampling has a relatively small impact on our perception of image quality.

![]() Note...

Note...

It does, however, have an influence on how accurately some effects can be applied to the image. For example, a chroma-key effect relies on being able to distinguish the hue of adjacent pixels. The lower the chrominance resolution, the less accurate the chroma-key effect will be. For this reason, some advanced chroma-key filters will also look at the pixel's luminance, and at the surrounding pixels' values, when deciding if a given pixel should be made transparent or not. Ideally, chroma-key shots should be done in a video format that supports 4:4:4 sampling, since that will result in cleaner edges. NTSC DV has a terrible reputation as a source for chroma-keys due to the (up to) 3-pixel "colour bleed" caused by the 4:1:1 sampling pattern.

It's up to the playback device (or software) to decide how to rebuild the full pixel colour information from the 4:2:2 data. It can simply use the same hue for each two pixels, it can interpolate the hue of the second pixel from the two adjacent pixels (left and right), or it can perform any number of "smart" interpolation operations, by looking at the hue and luminance of surrounding pixels. These techniques fall under the generic term of "chroma filtering".

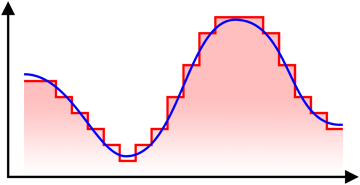

The term "quantisation", as used in the field of signal processing, refers to the division of a continuous signal into discrete steps. For example, when a continuous analog audio waveform is stored by a digital audio recorder, it is typically converted into a sequence of 48 thousand samples per second, where each sample is a 16-bit value that can vary between -32767 and 32767. In other words, the original signal is quantised in terms of amplitude (16 bits) and time (48 kHz).

Fig. 12 - Signal (blue) and quantisation (red)

Higher quantisation means less intermediate steps, and therefore (generally) lower precision and lower quality.

Most lossy compression algorithms involve some form of quantisation. Reducing an image's resolution (ex., from 640x480 pixels to 320x240) would be a form of spatial quantisation, which can be considered as a (very crude) form of lossy compression.

Some people might think of this as a reduction in size, but technically a pixel has no intrinsic "size"; to know the physical size of an image one has to divide its pixel resolution (measured in pixels) by the display or print resolution (measured in pixels per inch or dots per inch, respectively). For example, if an image has 640x480 pixels and a display resolution of 64 pixels per inch, it should be displayed with a width of 10 inches. If the image is downsampled to 320x240 and its display resolution value is changed to 32 pixels per inch, then it should still be displayed with a width of 10 inches. In other words, it retains the same size, but loses spatial definition. In practical terms, most images are displayed on screen using the monitor's display resolution, so the image's own PPI value is ignored. The DPI value (used for printing) however, is usually respected.

Chroma downsampling is a form of selective spatial quantisation, where different channels use different quantisation levels (i.e., the chrominance channels use higher spatial quantisation, making their resolution lower than that of the luminance channel).

Fig. 13 - Chroma downsampling (8x8 quantisation, unfiltered)

In the previous article we saw how the number of different colours in an image affected the probability of repeated patterns, which in turn influenced the efficiency of redundancy-based compression algorithms (like RLE and LZ). Therefore, reducing the number of colours in an image (in other words, quantising its colour palette) can also be seen as a form of lossy compression.

Fig. 14 - Posterisation caused by palette quantisation

The GIF file format, for example, limits the palette of each image to 256 different colours, which means it can encode each pixel into a single byte, and achieve good compression levels using the LZW algorithm. However, the low number of colours forces a loss of information when saving images that were originally in truecolour (24 bits per pixel) format, and can lead to a posterisation (banding) effect. This affects the entire image, but is more noticeable in areas with smooth colour gradients. Dithering can reduce the posterisation effect, but it will also reduce the efficiency of the LZW compression. For that reason, GIF is not a very good file format for "natural" images, like photographs, although it works very well for images with few colours, like logos, charts or small icons.

![]() Note...

Note...

This section is very similar to the one about prediction and error encoding in the first part of this primer. It's presented here due to its relevance for some forms of lossy compression.

As you may have noticed when looking at an audio waveform, nearby samples usually have similar values. This is a result of the fact that analog audio waves are continuous, and that digital audio normally uses a pretty high sampling rate (ex., 44.1 kHz for CDs, 48 kHz for DAT and DV, etc.). The higher the sampling rate, the less the amplitude will change between samples (for the same waveform).

So what happens if, instead of storing each sample using an "absolute" value, we store it as the difference (also called "the delta") from the previous sample? Let's say our source data consists of this (where each value is a 16-bit integer):

[2050] [2060] [2069] [2077] [2083] [2086] [2087] [2087] [2086] [2083] [2077]

If we replace all values (except for the first one, of course) with the difference from the previous value, we get this:

[2050] [10] [9] [8] [6] [3] [1] [0] [-1] [-3] [-6]

When this type of representation is used for audio, it is known as DPCM, or "Differential Pulse Coded Modulation".

![]() Note...

Note...

The term PCM (Pulse Coded Modulation) refers to the digital encoding of the original audio waveform as a series of discrete amplitude values (in other words, when people talk about "uncompressed digital audio", it usually means PCM).

How does this help us? Well, the difference values are all quite small, which means that we don't need 16 bits to store each of them. In this case the difference is never greater than 10. That fits in 5 bits (which can represent values from -15 to 15).

![]() Note...

Note...

If you are not familiar with binary notation, here is a quick explanation: the number of different values that can be represented by N bits is equal to two raised to the power of N. So two bits can represent up to 4 values, three bits can represent up to 8 values, four bits can represent up to 16 values, and so on. Eight bits (one byte) can represent up to 256 different values, as we had seen in the first part of this article. The actual values represented by those bits depend on the encoding format. A typical format when both positive and negative integers are required is to use the first bit to indicate the sign and the rest of the bits to represent the value, as a standard binary number. So a sequence of 5 bits such as 10010 would represent the value -2. Look up "binary numbers" on the internet for more details.

In other words, if the difference between consecutive samples stays within that range, all samples (except for the first one) can be "compressed" from 16 bits to just 5 (a reduction of nearly 70%). That is a pretty big "if", though.

As the sound frequency increases, so does the difference between consecutive sample values (unless we also increase the sampling frequency, but that would increase the total amount of data, and we're trying to reduce it). If we limited our differential information to 5 bits per sample (on a 16-bit amplitude scale), that would limit us to frequencies below 6 Hz. Since human speech is typically between 400 and 3000 Hz, it's pretty obvious that 5-bit DPCM won't be very useful.

Real-world implementations of DPCM typically use about 12 bits to encode the difference between samples on a 16-bit source. This means the value of a sample can differ from the previous one by plus or minus 2047. At a 48 kHz sampling rate, that means we can only encode frequencies up to 1.5 kHz in a 0dB normalised file.

Human speech can contain frequencies above 1.5 kHz, though, so 12-bit DPCM is going to have issues with speech that is both high and loud. And it's going to be virtually useless for something like music, where much higher frequencies are common (and removing them makes the sound almost unrecognisable).

This led to the creation of ADPCM (Adaptive DPCM). Instead of storing the difference from the previous sample, ADPCM applies a simple prediction algorithm to the data to "guess" the value of the next sample, and then stores the difference between that prediction and the actual sample value (in other words, it stores the prediction error).

For example, if sample #1 had the value 10000 and sample #2 had the value 15000, the encoder might predict that sample #3 is going to have the value 20000. If the real sample turns out to have the value 19835, the encoder simply stores the value -165.

As long as the same prediction algorithm is used for compression and decompression, and as long as the prediction error is lower than the range of allowed difference values, the original sound can be recovered.

ADPCM encoders typically use 4 to 8 bits to store each sample difference, which translates to a reduction of 50 to 75% in the total volume of data (compared to uncompressed 16-bit PCM).

It's important to note that the prediction algorithm will not always be able to guess the value well enough to land within a margin that can be corrected by the difference data (which, in the case of ADPCM, has a fixed size). For this reason, ADPCM is technically a lossy algorithm. Typically, the higher the frequencies and amplitudes in the original audio data, the more likely it is that there will be a loss of information, and possibly a perceptible loss of quality.

![]() Note...

Note...

Some image compressors are based on similar techniques, as described in the first part of the article. When used for lossless compression, the compressor has to ensure that the difference data can store values big enough to "cover" for any prediction error. This means the error values it stores must be allowed to be as big as the original values themselves (for situations where the predictor got it completely wrong). It's then up to the entropy encoding algorithm to turn the more frequent values into shorter codes. See the first part of this primer for more details.

ADPCM is rarely used these days, except for some telephony equipment. The increasing processing power (and decreasing cost) of modern chips has made it viable for nearly all devices to use more complex (but also more efficient) algorithms, such as the ones described in the next section.

It's time to meet...

Say hello to the mother of (nearly) all modern lossy compression algorithms.

Using a discrete Fourier transform limited to real numbers, we can translate our source data into frequency space, actually approaching the ideal Karhunen-Loève transform for signals based on limits of stochastic processes with the Markov property...

No, come back, I promise I won't do that again.

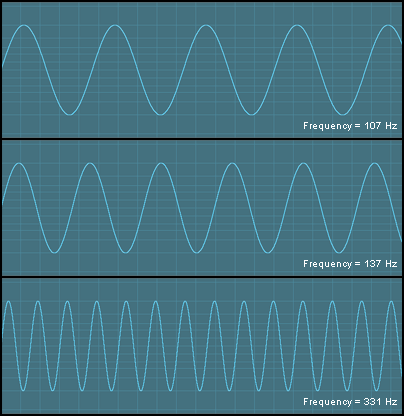

Let's start with something slightly simpler. Take these audio waveforms:

Fig. 15 - Three sinusoidal waveforms with different frequencies

Each of those three waveforms can be described using three values: its amplitude, its frequency and its phase. The amplitude is how high it goes, the frequency is how many cycles it goes through per second and the phase is what point of the cycle it starts at.

Assuming we use 16 bits (2 bytes) to define each of those values, this means that each of those waveforms can be encoded into 6 bytes, which means the three can be encoded into 18 bytes. Considering that, if they were recorded to a DAT tape or some other digital format, they would need approximately 96 thousand bytes per second each, I'd say that's pretty good "compression", no? Of course, we'd still need to define their length, add file headers describing the type of wave (sinusoidal, square, triangular, etc.), and so on, but some red tape is always necessary, and it doesn't change the fundamental issue: describing those sounds by their "wave properties" takes up a lot less space than storing them as a series of PCM samples.

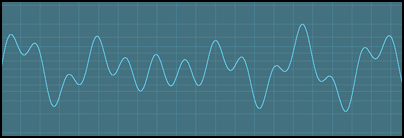

Now look at this waveform:

Fig. 16 - A complex waveform

Looks considerably more complex, right? But actually there is less to this waveform than meets the eye: it is simply the result of mixing the three signals shown above. And this means that, technically, it could also be encoded in just 18 bytes (plus the file headers, etc., which aren't relevant right now).

Wouldn't it be great if there was some way to transform a digitised audio file into a list of frequencies that, when mixed, could be used to rebuild the original sound?

This is where something called DCT comes in. DCT stands for "discrete cosine transform", and refers to a group of mathematical functions that can be used to do just that: express a signal in terms of a sum of sinusoids with different frequencies and amplitudes.

![]() Note...

Note...

There are eight standard types of DCT, usually identified by a roman numeral after their name (DCT-I up to DCT-VIII). The one most commonly used for lossy image compression is the DCT-II, usually called just DCT. The formula for this DCT is the one shown at the top of this section. Lossy audio compression normally uses the MDCT (a sub-type of DCT-IV). DCT functions belong to a bigger family of mathematical functions called Fourier transforms.

I used audio as an example because it's easy to visualise audio waveforms as a 2D bitmap. It's not so easy to visualise the frequencies that make up a coloured, two-dimensional image, but images can also be expressed (or at least approximated) as a set of interfering frequencies. Here the frequency is not related to time (as in the case of audio signals), or to the wavelength of each colour, but rather to space. It refers to the way the image's channels (ex., brightness) vary along the axes, from one pixel to the next (and the next, and so on).

The JPEG (Joint Photographic Experts Group) image format, for example, follows these steps to encode an image:

First the image is converted to the YCbCr colour space, since this generally improves compressibility of "natural" images, and makes it possible to use chroma downsampling. Some encoders skip this stage and encode the image directly in RGB, but that generally results in poorer performance (a lower compression ratio, or lower quality for the same compression ratio).

Then the chroma channels are optionally downsampled using a 4:2:2 or 4:2:0 pattern, depending on the level of compression intended.

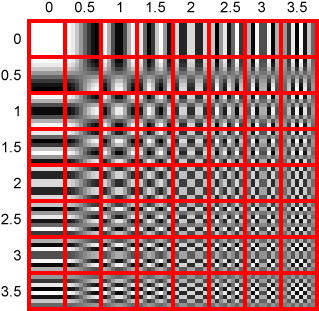

Each channel of the image is then divided into 8x8 pixel blocks, and each block is converted into frequencies by the discrete cosine transform (DCT). The encoder doesn't know in advance which frequencies are actually present in the block, though, and searching for every possible frequency can be very slow, so each block is encoded as a linear combination of 64 different patterns (seen in the following figure), each made up of progressively increasing horizontal and vertical frequencies.

Fig. 17 - JPEG DCT patterns (enlarged)

Since we are trying to encode 64 pixels (an 8x8 block) and there are 64 patterns, each of which must be assigned a coefficient, this does not actually reduce the amount of data necessary to represent the image. On the contrary; DCT coefficients will generally need more than 8 bits to represent the original information. Typical values are between 9 and 12 bits, depending on the precision of the encoder. This means that the data volume can actually increase by 50% at this stage.

That is reversed, however, when the DCT coefficients are quantised. The global quantisation level is typically controlled by the "quality" or "compression level" slider in the software used to save the file. The fact that the image is represented by a series of frequency coefficients, however, means the encoder can use different quantisation levels for different frequencies. Human vision is pretty good at detecting subtle variations of colour or brightness across large areas (ex., the colour of the sky in a photograph of the sunset), corresponding to the lowest frequencies, but it's not so good at evaluating the exact magnitude of high frequency intensity variations (ex., it's very hard to distinguish a dark gray line from a black one if both are over a white background). That characteristic of human vision is obvious when looking at figure 14 (the butterfly, above). The quantisation is very noticeable in areas with smooth colour gradients, but almost unnoticeable in areas with a lot of small detail. JPEG encoders take advantage of this fact and use higher quantisation for higher frequencies (essentially this means the DCT coefficients corresponding to higher frequencies are divided by bigger numbers).

The quantised data is then sorted in a diagonal zig-zag order, starting at the top left corner of the matrix (where the highest values will usually be, since they used less quantisation) and ending at the lowest right corner (where most of the values are likely to be zero, or very close to zero). This reordering improves the next step, which is to apply an optimised RLE algorithm to the sequence of values. Finally, the data is entropy-encoded, either with the Huffman algorithm or using arithmetic coding (most software uses Huffman coding, which is much faster, although not quite as efficient).

![]() Note...

Note...

RLE and entropy coding were described in the first part of this compression primer. Understanding them is not required to understand this part, though; the really important concepts here are the DCT and the variable quantisation.

Different types of DCT and MDCT (a similar transform) can be found in many modern lossy compression algorithms, such as the ones used by the JPEG and MP3 file formats. It's also used by various video codecs, like DV, MJPEG, and MPEG (including its many versions and derivatives).

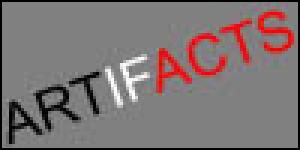

As you have probably noticed, JPEG images with high levels of compression sometimes display some "artifacts". These artifacts tend to be more evident near high-contrast edges, and they often have a "block-like" appearance, where each "block" is a square with 8x8 pixels. Now that you know how JPEG uses DCT, the appearance of those artifacts should make more sense. They are simply the result of high quantisation of high-frequency coefficients (more likely to occur in areas with finer detail, such as high-contrast edges). If you look closely at those blocks, you might even notice that some of them look a lot like the patterns shown in the DCT pattern table, above (although most will be a mix of two or more of those patterns).

Good compressors will also take into acount an effect known as "frequency masking", where some types of variations become less noticeable if they are near other types of variations (for example, after a loud bang, our ears are less sensitive to subtle sound variations, so the audio immediately following an explosion or gunshot can be stored with less precision, improving its compression, and similar optimisations can be used in the compression of images).

The image below is a 3:1 reproduction of a file saved in JPEG with the "quality" slider set to 7 in Adobe Photoshop (which is a typical value used by photographs posted on the internet):

Fig. 18 - JPEG compression artifacts

Images with high-contrast edges or fine detail (such as letters, line drawings, certain types of logos, or images with dithering patterns) are not well-suited to DCT-based algorithms. Not only do they have a bigger tendency to show compression artifacts, but the files often end up being larger than if they had been saved in a lossless compression format, such as GIF or PNG. In other words, using JPEG for that kind of image is a lose-lose situation.

When DCT is used for audio (ex., MP3, Ogg Vorbis, etc.), the quantisation coefficients are distributed differently. Although we can see low (spatial) frequencies very well, we cannot hear low (temporal) frequencies, so both very high and very low audio frequencies can use higher quantisation than the midtones (we are most sensitive to frequencies around 3 kHz). DCT-based audio compression can generally achieve a reduction of 25% or more, compared to ADPCM, for the same sound quality. Even at a 10:1 compression ratio (compared to the source PCM data), DCT-compressed audio files can sound almost indistinguishable from the original.

Two other compression techniques deserve to be mentioned, although they are not as common as DCT-based algorithms.

First, there is fractal compression, which works by looking for similar shapes (at different scales and angles) within an image. Despite much promise and the excitement always caused by the word "fractal", the fact is fractal compression has failed to deliver. Although very efficient in theory, real-world fractal compressors tend to produce worse results than JPEG, except at extremely high compression levels (where JPEG images look terrible and fractal-compressed images look slightly less terrible). In addition to that, fractal compression is slow and requires a great deal of human intervention. It is sometimes called "the graduate student algorithm", because the only way to get good compression is to lock a graduate student in a room with a computer until he manages to tweak the fractal compressor for the image you are trying to encode. The poor real-world performance of fractal compressors might be due to the fact that the process is covered by several patents, which limits the number of people and companies allowed to use and improve this technology.

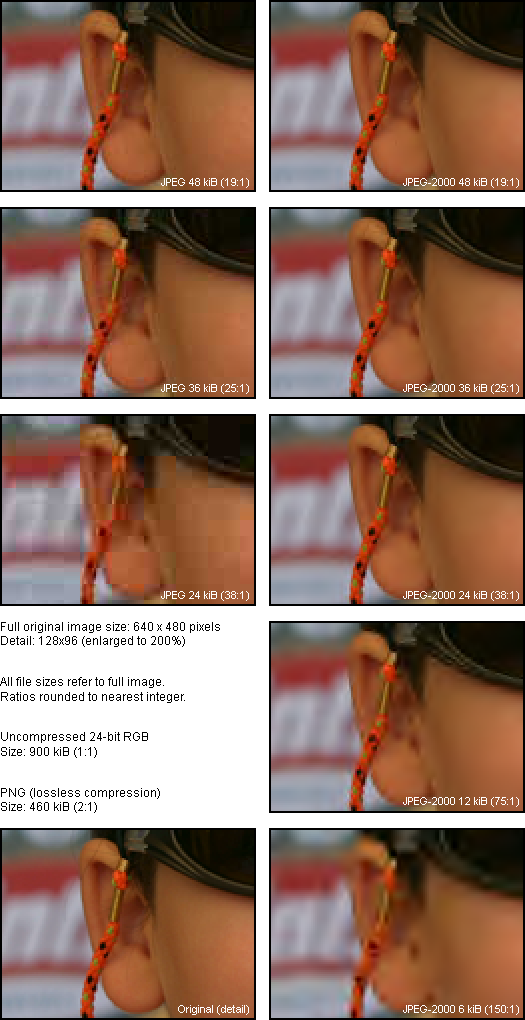

The second method worth mentioning is wavelet-based compression. This encodes a signal as a series of scaled and translated copies of a finite (or fast-decaying) waveform. This concept actually has some similarities with the notion of a fractal, but it is different from fractal compression, since it doesn't look for visual self-similarity. It also has some similarities with DCT, but is a considerably more complex process, and therefore slower to compute. Wavelet compression is used by the JPEG-2000 format (file extension ".JP2" or ".J2C"), which was meant to replace the original JPEG format. However, most web browsers don't have built-in support for JPEG-2000, and the format does not have support for EXIF data (used by digital cameras to store information about each photo directly in the JPEG file), so it hasn't quite caught on. Typically, JPEG-2000 files are 20% to 50% smaller than JPEG images, for the same visual quality. They also tend to have less obvious compression artifacts at very high compression levels (they look blurry instead of blocky). Support for JPEG-2000 can be added to browsers and image editing applications by installing the appropriate plug-ins.

The following figure shows a comparison between JPEG and JPEG-2000 at different file sizes.

Fig. 19 - JPEG vs. JPEG-2000

The JPEG encoder used was unable to produce a file smaller than 24 kiB. The 12 kiB JPEG-2000 file actually has slightly more detail than the 24 kiB JPEG, and with far less offensive artifacts. Even at 6 kiB (despite having less detail) the JPEG-2000 version has a more "natural" look. Also worth noting is that the 48 kiB JPEG-2000 file, despite lacking any noticeable compression artifacts, does not contain all the detail of the original (compare the three stray hairs near the top of the ear). Still, for a compression ratio of about 20:1 (10:1 compared to PNG), I suppose we can live with some hair loss.

All the images above are reproduced at a 2:1 ratio (with no interpolation) to make the compression artifacts easier to see. The final image file was saved in PNG format (lossless), so that no other compression artifacts were introduced.

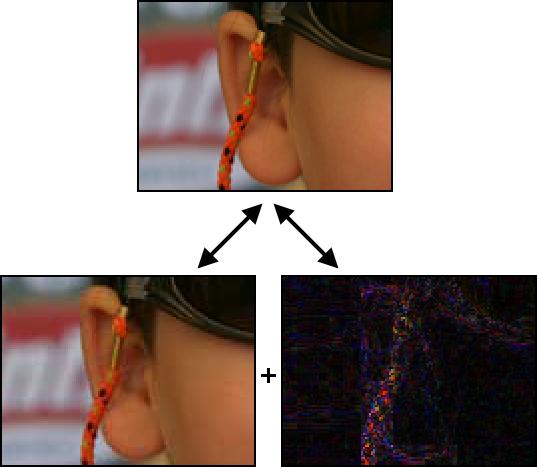

I mentioned that some of the techniques used in lossy compression can be also used to improve lossless compression. Naturally, I don't mean just those very lucky and very rare cases where the lossy compression algorithm manages to describe the source data perfectly.

A common way to evaluate the differences between a (lossily) compressed version of an image and its original is to subtract the pixel values of one from the other and display the result as a new image (sometimes called "the delta"). In that image, darker areas indicate smaller differences, brighter areas indicate bigger differences. The following figure shows the difference between the original image and a JPEG-compressed version at a 25:1 ratio.

Fig. 20 - Lossy compression + delta = original data

The contrast of the delta image has been increased and all images are enlarged to 200% to improve visibility. Also, the delta image shown here is unsigned, since computer monitors can't display negative colours (the actual data would contain both positive and negative numbers). Notice how the differences are bigger near edges or areas with small detail (which correspond to higher frequencies), and smaller in low-contrast areas.

Let's use our example from the previous sections (the lossy "LCS" gradient), and see how the difference data is calculated and stored:

| Original data (11 bytes): | [2] [4] [8] [12] [14] [18] [19] [24] [25] [28] [30] |

| Compressed data (4 bytes): | LCS [2] [11] [3] |

| Uncompressed data (11 bytes): | [2] [5] [8] [11] [14] [17] [20] [23] [26] [29] [32] |

| Difference (11 bytes): | [0] [-1] [0] [1] [0] [1] [-1] [1] [-1] [-1] [-2] |

Using only the compressed data and the difference from the original (sometimes called "error data"), we could now rebuild the source data exactly (losslessly), simply by adding the two. Of course, as you probably noticed, adding the size of the compressed data and the difference data, we actually end up with 15 bytes, which is more than the original, so this isn't exactly a good idea. But you probably also noticed that the difference data doesn't vary much. In fact, here it varies only across 4 values (-2, -1, 0 and 1), and since our maximum error margin was defined as "3" during the compression, we know that the difference value is never going to be lower than -3 or higher than 3. In other words, the difference value will always be one of the following seven: -3, -2, -1, 0, 1, 2 or 3. These can be encoded using three bits (one bit for the sign, two bits for the value).

This means we don't actually need 11 bytes to store the difference data for 11 pixels, we only need 33 bits, which is barely over 4 bytes. Let's say we store it in 5 bytes, so we don't have to deal with "hanging bits".

With these 4 bytes of compressed data plus 5 bytes of difference data (a total of 9 bytes), we can rebuild the 11 bytes of source data exactly. This might not seem all that impressive, but remember that this is data that did not follow any "neat" rule and did not have any repeated sequences, so redundancy-based algorithms would not have been able to compress it at all. Also, remember that we are looking at an extremely small amount of data (just 11 pixels). In a real image (typically over 500 thousand pixels), using a more advanced algorithm, the lossy compression stage would be a lot more efficient.

On top of this, we could apply one or more lossless compression algorithms (like RLE, LZ or entropy coding) to the difference data, which would bring its size down further (since it can only have seven different values, repeated values and repeated patterns are very common, making it quite compressible).

As mentioned in the first part of this article, several lossless media file formats, such as PNG (image) and FLAC (audio) use a similar technique (prediction plus error coding) to achieve better compression than generic (redundancy-based) lossless algorithms. That is a special case of the lossy + delta approach, that works one byte (or one sample) at a time, instead of using lossy compression on the entire file and calculating the delta at the end.

WavPack (a relatively unknown but very versatile open-source audio compression format developed by David Bryant) allows the user to save two separate files: one containing the lossily-compressed audio (similar to an MP3 file), and the other containing the difference information that can be applied to the former to rebuild the exact original data. This makes it possible, for example, to transmit several clips of lossily-compressed audio, listen to them, pick the ones that are going to be used, and then transmit the "correction" (difference) files only for those clips. Another possibility would be to keep one's music collection in this dual file format at home (for listening on a high-end sound system), copying only the lossily-compressed files to a portable music player, to save space.

So, there you have it: lossless lossy compression. Sort of.

And so we come to the end of the second part of this series. I hope you found it interesting and accessible.

I deliberately left out a lot of technical details, and used (fictitious) simplified examples to explain some concepts, because, as algorithms get more complex and as file formats start to use multiple algorithms, the amount of space required to describe them accurately grows exponentially. Unless you are a programmer, you probably don't need to worry about those details, but if you are curious, you'll be able to find a lot of information on the internet.

![]() Note...

Note...

How much was this guide worth to you? More than your collection of Picasso originals? Less than those cigarettes you're trying to quit? You decide. Click the link below and enter the amount you consider fair. You will need a valid credit card or a PayPal account.

PAY WHAT YOU LIKE!

If you have any questions or comments about this guide, post a message in the forum. If you quote this guide on other websites, in your e-mails, academic papers, etc., please include a link to this page.

The next article will describe how some of these techniques can be applied to video and how other (video-specific) techniques are used by modern encoders. It will also include a list of links to web sites with more information about compression and media file formats.

- Part 1 - Lossless data compression

- Part 2 - Lossy compression of stills and audio

- Part 3 - Video compression

The sound frequency sensitivity chart and DCT pattern table used in this article were copied from public domain sources.